Enterprise leaders face an unprecedented challenge: 85% of organizations recognize the business value of event-driven architecture (EDA), yet most struggle with implementation complexity that can derail digital transformation initiatives. The gap between recognizing EDA's potential and successfully deploying enterprise-grade systems often determines whether organizations achieve their Technology Transformation goals or fall behind competitors.

At LogixGuru, our 20+ years of enterprise architecture experience has shown us that event-driven systems aren't just technical implementations. They're strategic business enablers. When Netflix processes 1.8 billion daily requests through event-driven patterns, they're not showcasing technical prowess; they're demonstrating how Technology Transformation creates sustainable competitive advantage in real-time markets projected to reach $66.2B by 2033.

This comprehensive guide explores how strategic event-driven architecture implementation, anchored in our proven F.U.T.U.R.E framework's Technology Transformation principles, enables enterprises to build scalable, resilient systems that drive measurable business outcomes.

Forward-Thinking Customer Understanding: The Business Case for Event-Driven Systems

Modern enterprise customers demand instantaneous responses across digital touchpoints. Whether processing financial transactions, managing supply chain disruptions, or delivering personalized experiences, customer expectations have fundamentally shifted toward real-time interactions. Event-driven architecture directly addresses these customer-centric requirements by enabling organizations to respond to business events as they occur, rather than through batch processing or scheduled updates.

Consider the customer impact when systems can process 100,000+ events per second while maintaining sub-second response times. This capability transforms customer experiences from reactive to proactive, enabling predictive maintenance notifications, real-time fraud detection, and dynamic pricing adjustments that directly enhance customer satisfaction and loyalty.

The financial services industry exemplifies this customer-focused approach. When market conditions change, event-driven systems can automatically trigger risk assessments, portfolio rebalancing, and customer notifications as soon as the relevant market event arrives, rather than waiting for the next batch run. This responsiveness doesn't just meet customer expectations; it exceeds them, creating differentiation in commoditized markets.

Technology Transformation: Core Architectural Patterns for Enterprise Scale

Event Sourcing: Building Auditable Business Intelligence

Event Sourcing captures every business state change as an immutable event in a durable log, creating what we term "business truth preservation." This pattern provides enterprises with complete audit trails essential for regulatory compliance while enabling system reconstruction through event replay—a capability that proves invaluable during system migrations or disaster recovery scenarios.
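To make the pattern concrete, here is a minimal Python sketch of an append-only event store and state reconstruction by replay. The `Event` fields, the `EventStore` class, and the account-balance domain are hypothetical illustrations. A production store would persist to durable storage (for example, a Kafka topic or a database table) and handle concurrent writers, which this in-memory version does not.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Event:
    """An immutable record of one business state change (hypothetical schema)."""
    account_id: str
    kind: str      # "Deposited" or "Withdrawn"
    amount: int    # minor units, e.g. cents


class EventStore:
    """Append-only in-memory log; a real store would persist events durably."""

    def __init__(self) -> None:
        self._log: List[Event] = []

    def append(self, event: Event) -> None:
        self._log.append(event)  # events are only ever appended, never updated

    def events_for(self, account_id: str) -> List[Event]:
        return [e for e in self._log if e.account_id == account_id]


def replay_balance(events: List[Event]) -> int:
    """Rebuild current state by folding over the full event history."""
    balance = 0
    for e in events:
        if e.kind == "Deposited":
            balance += e.amount
        elif e.kind == "Withdrawn":
            balance -= e.amount
        else:
            raise ValueError(f"unknown event kind: {e.kind}")
    return balance


store = EventStore()
store.append(Event("acct-1", "Deposited", 10_000))
store.append(Event("acct-1", "Withdrawn", 2_500))
store.append(Event("acct-2", "Deposited", 500))
print(replay_balance(store.events_for("acct-1")))  # 7500
```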

The strategic advantage extends beyond compliance. Event stores become rich sources of business intelligence, enabling organizations to analyze customer behavior patterns, operational efficiency metrics, and market trend indicators at the granularity of individual events. Because every business event is preserved and queryable, organizations gain analytical capabilities that a database storing only current state cannot offer, since each update there overwrites the history that led to it.

Implementation requires careful consideration of event schema evolution, storage optimization, and replay performance. Our enterprise clients typically see event volumes ranging from millions to billions of events monthly, necessitating robust infrastructure planning and monitoring strategies.
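One common technique for schema evolution in an event store is upcasting: old events stay untouched in the log and are converted to the current schema when they are read. The sketch below assumes a hypothetical change from a v1 event with a float dollar `amount` to a v2 event with an integer `amount_cents` and a `currency` field; the event shapes and the USD default are assumptions for illustration only.

```python
from typing import Any, Callable, Dict

Event = Dict[str, Any]


def upcast_v1_to_v2(e: Event) -> Event:
    # Hypothetical change: v1 stored a float "amount" in dollars with no currency;
    # v2 stores an integer "amount_cents" plus an explicit "currency".
    return {
        "version": 2,
        "type": e["type"],
        "amount_cents": round(e["amount"] * 100),
        "currency": "USD",  # assumption: every v1 event was in USD
    }


UPCASTERS: Dict[int, Callable[[Event], Event]] = {1: upcast_v1_to_v2}
LATEST_VERSION = 2


def upcast(e: Event) -> Event:
    """Apply upcasters one step at a time until the event reaches the latest version.
    Returns a new dict; the stored event is never modified."""
    while e["version"] < LATEST_VERSION:
        e = UPCASTERS[e["version"]](e)
    return e


stored = [
    {"version": 1, "type": "Deposited", "amount": 12.5},
    {"version": 2, "type": "Deposited", "amount_cents": 300, "currency": "USD"},
]
print([upcast(e)["amount_cents"] for e in stored])  # [1250, 300]
```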

CQRS: Optimizing Read and Write Operations

Command Query Responsibility Segregation separates read and write operations into distinct models, enabling independent optimization and scaling strategies. Write models focus on business logic validation and command processing, while read models serve optimized queries through materialized views tailored to specific user interface requirements.
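A minimal sketch of the split, assuming a single in-process event list: the hypothetical `OrderWriteModel` validates commands and emits `OrderPlaced` events, and `CustomerSpendView` is a read model projected from those events and shaped for one query. In a real deployment the projection would typically consume events asynchronously from a broker, so the read side would be eventually consistent with the write side.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass(frozen=True)
class OrderPlaced:
    order_id: str
    customer_id: str
    total_cents: int


class OrderWriteModel:
    """Command side: enforces business rules and emits events."""

    def __init__(self) -> None:
        self._seen_ids: Set[str] = set()
        self.events: List[OrderPlaced] = []

    def place_order(self, order_id: str, customer_id: str, total_cents: int) -> OrderPlaced:
        if total_cents <= 0:
            raise ValueError("order total must be positive")
        if order_id in self._seen_ids:
            raise ValueError(f"duplicate order id: {order_id}")
        self._seen_ids.add(order_id)
        event = OrderPlaced(order_id, customer_id, total_cents)
        self.events.append(event)
        return event


class CustomerSpendView:
    """Query side: a denormalized projection built only from events."""

    def __init__(self) -> None:
        self.total_by_customer: Dict[str, int] = defaultdict(int)

    def apply(self, event: OrderPlaced) -> None:
        self.total_by_customer[event.customer_id] += event.total_cents

    def lifetime_spend(self, customer_id: str) -> int:
        return self.total_by_customer.get(customer_id, 0)


write_model = OrderWriteModel()
read_model = CustomerSpendView()
for oid, cust, amt in [("o1", "c1", 4_000), ("o2", "c1", 1_500), ("o3", "c2", 900)]:
    read_model.apply(write_model.place_order(oid, cust, amt))
print(read_model.lifetime_spend("c1"))  # 5500
```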

This separation proves particularly valuable in enterprise environments where read operations often outnumber writes by ratios exceeding 100:1. Organizations can scale read infrastructure independently, implement caching strategies without impacting write performance, and create specialized read models for different user personas or reporting requirements.

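The pattern also enables technology diversity within a single application. Write models might use relational databases for transactional consistency, while read models use NoSQL stores optimized for query performance and horizontal scaling. The trade-off is that read models are usually updated asynchronously from the write side, so queries can briefly return stale data; this eventual consistency must be acceptable for each read model's use case.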

Enterprise Messaging Patterns

Event-Carried State Transfer reduces coupling between systems by embedding the relevant state in the events themselves. Instead of querying the source system for additional context, downstream consumers receive enough information in each event to make their processing decisions. This removes the follow-up network calls that notification-only events would require and reduces the risk of cascading failures caused by synchronous API dependencies. The trade-off is that consumers keep their own copies of that data, which may briefly lag behind the source.
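Compared with a notification-only event ("customer c42 changed"), the sketch below carries the new address in the event itself, so the consuming service can keep a local copy and never call back to the source. `CustomerAddressChanged` and `ShippingService` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class CustomerAddressChanged:
    # Event-carried state: the event includes the new address itself,
    # so consumers do not need to query the customer service.
    customer_id: str
    street: str
    city: str
    postal_code: str


class ShippingService:
    """Keeps its own local replica of the customer data it needs."""

    def __init__(self) -> None:
        self.addresses: Dict[str, str] = {}

    def on_address_changed(self, e: CustomerAddressChanged) -> None:
        self.addresses[e.customer_id] = f"{e.street}, {e.city} {e.postal_code}"

    def label_for(self, customer_id: str) -> str:
        # Served from the local copy: no synchronous call to the customer service,
        # so an outage there does not block label printing.
        return self.addresses[customer_id]


shipping = ShippingService()
shipping.on_address_changed(
    CustomerAddressChanged("c42", "1 Main St", "Springfield", "12345")
)
print(shipping.label_for("c42"))  # 1 Main St, Springfield 12345
```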

Competing Consumers enables horizontal scaling by distributing event processing across multiple worker instances. This pattern proves essential for handling variable workloads and ensuring system resilience during peak demand periods. Implementation requires careful consideration of message ordering, duplicate handling, and worker coordination strategies.
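A minimal in-process sketch of Competing Consumers using Python's standard `queue` and `threading` modules: several workers pull from one shared queue, so each message is dequeued by exactly one worker, and a set of seen message IDs makes the handler idempotent when a message is redelivered. The queue stands in for a real broker's consumer group; the message IDs and worker count are arbitrary assumptions.

```python
import queue
import threading
from typing import List, Optional, Set

NUM_WORKERS = 3
messages = ["m1", "m2", "m3", "m2", "m4", "m5"]  # "m2" is redelivered (at-least-once)

work: "queue.Queue[Optional[str]]" = queue.Queue()
processed: List[str] = []
seen: Set[str] = set()
lock = threading.Lock()


def worker() -> None:
    while True:
        msg_id = work.get()           # each item goes to exactly one waiting worker
        if msg_id is None:            # sentinel: shut this worker down
            work.task_done()
            return
        with lock:
            duplicate = msg_id in seen
            seen.add(msg_id)          # simplification: recorded before the work is done
        if not duplicate:
            # Hypothetical business work would go here.
            with lock:
                processed.append(msg_id)
        work.task_done()


threads = [threading.Thread(target=worker) for _ in range(NUM_WORKERS)]
for t in threads:
    t.start()
for m in messages:
    work.put(m)
for _ in threads:
    work.put(None)                    # one sentinel per worker, queued after all messages
for t in threads:
    t.join()

print(sorted(processed))  # ['m1', 'm2', 'm3', 'm4', 'm5']
```

Two simplifications are worth flagging. The worker marks an ID as seen before doing the work, so a failure mid-processing would lose that message; a real consumer would record it only after success, ideally in the same transaction as the work. And because workers run in parallel, processing order across messages is not preserved.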

Event Bridge patterns connect distributed event brokers across geographical regions or organizational boundaries, enabling fault-tolerant event distribution at global scale. This capability supports multi-region deployments, disaster recovery strategies, and organizational integration scenarios.
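The core of a bridge is a forwarder that copies events from one broker to another while tagging where each event originated, so a two-way bridge does not echo events back and forth indefinitely. The sketch below models each regional broker as an in-memory list; the `Broker` class, region names, and resume-position scheme are hypothetical. For Kafka specifically, MirrorMaker 2 is an existing tool for cross-cluster replication.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Broker:
    """Stand-in for one regional broker: an ordered, append-only list of events."""
    region: str
    log: List[Dict[str, Any]] = field(default_factory=list)

    def publish(self, payload: Dict[str, Any], origin: str = "") -> None:
        # Every event records the region where it was first published.
        self.log.append({"origin": origin or self.region, **payload})


def bridge(src: Broker, dst: Broker, start: int) -> int:
    """Forward src events from position `start` to dst, skipping any that
    originated in dst (this prevents loops in a two-way bridge).
    Returns the next position so the bridge can resume where it left off."""
    for event in src.log[start:]:
        if event["origin"] != dst.region:
            payload = {k: v for k, v in event.items() if k != "origin"}
            dst.publish(payload, origin=event["origin"])
    return len(src.log)


us, eu = Broker("us-east"), Broker("eu-west")
us.publish({"order": 1})
eu.publish({"order": 2})
pos_us = bridge(us, eu, 0)   # eu receives order 1
pos_eu = bridge(eu, us, 0)   # us receives order 2; order 1 is not echoed back
print([e["order"] for e in us.log])  # [1, 2]
print([e["order"] for e in eu.log])  # [2, 1]
```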

Unified Data Intelligence: Transforming Events into Strategic Insights

Event-driven architectures generate unprecedented volumes of business data, creating opportunities for advanced analytics and machine learning applications. Every customer interaction, system state change, and business process execution creates data points that, when properly analyzed, reveal strategic insights about operational efficiency, customer behavior, and market dynamics.

Real-time stream processing generates business intelligence as events arrive. Organizations can identify anomalies as they occur, trigger automated responses to specific business conditions, and maintain real-time dashboards that provide operational visibility across complex distributed systems. This capability turns data from a historical reporting tool into an active business intelligence platform.
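As an illustration of detecting anomalies as events arrive, the sketch below flags any reading more than `k` standard deviations from the mean of the preceding `window` readings, processing one event at a time with bounded memory. The rolling z-score rule and its default parameters are assumptions chosen for simplicity, not a recommended detector; note that a flagged outlier still enters the window and temporarily widens it.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque, Iterable, Iterator, Tuple


def detect_anomalies(
    values: Iterable[float], window: int = 5, k: float = 3.0
) -> Iterator[Tuple[int, float]]:
    """Yield (index, value) for readings more than k standard deviations away
    from the mean of the previous `window` readings. Works incrementally, so it
    can sit directly on an unbounded stream."""
    recent: Deque[float] = deque(maxlen=window)
    for i, v in enumerate(values):
        if len(recent) == window:
            mu = mean(recent)
            sigma = pstdev(recent)
            if sigma > 0 and abs(v - mu) > k * sigma:
                yield i, v
        recent.append(v)  # deque drops the oldest reading automatically


readings = [100, 102, 98, 101, 99, 100, 250, 101]
print(list(detect_anomalies(readings)))  # [(6, 250)]
```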

Event streams also support advanced analytics scenarios including predictive modeling, anomaly detection, and recommendation engines. Machine learning models can score events in real time, enabling dynamic personalization, fraud detection, and operational optimization that adapt continuously to changing business conditions.

The key to maximizing data intelligence value lies in event schema design and stream processing architecture. Well-designed event schemas capture both technical and business context, enabling rich analytical scenarios while maintaining processing efficiency at enterprise scale.
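One way to capture both kinds of context is an envelope that separates technical metadata (ID, timestamp, schema version, correlation ID, source) from the business payload. The field names below are hypothetical; the CNCF CloudEvents specification defines a standardized envelope that serves a similar purpose and may be a better starting point in practice.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict


@dataclass(frozen=True)
class EventEnvelope:
    event_type: str                  # what happened, e.g. "OrderPlaced"
    schema_version: int              # lets consumers choose the right parser
    payload: Dict[str, Any]          # business context: the facts of the event
    correlation_id: str              # links events from one business process
    source: str = "unknown"          # producing service
    event_id: str = field(default_factory=lambda: str(uuid.uuid4()))  # for deduplication
    # Should record when the business event happened; defaults to creation time here.
    occurred_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)


evt = EventEnvelope(
    event_type="OrderPlaced",
    schema_version=2,
    payload={"order_id": "o1", "total_cents": 4000, "channel": "mobile",
             "customer_segment": "loyalty"},
    correlation_id="checkout-123",
    source="checkout-service",
)
decoded = json.loads(evt.to_json())
print(decoded["event_type"], decoded["payload"]["channel"])  # OrderPlaced mobile
```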

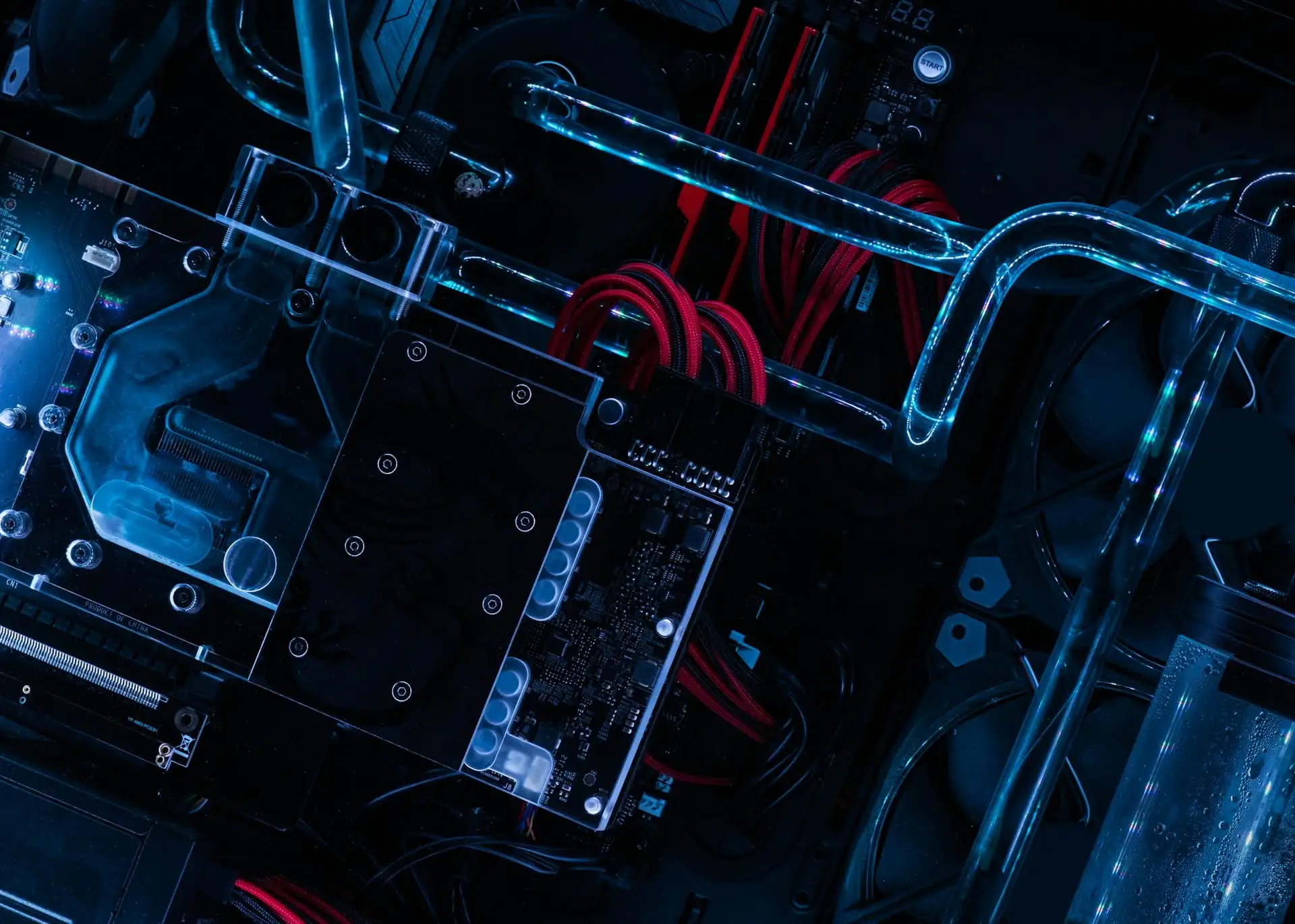

Implementation Architecture: Enterprise-Grade Technology Stack

Event Streaming Infrastructure

Apache Kafka serves as the foundation for high-throughput event streaming, providing the durability, scalability, and fault tolerance that enterprise workloads require. Kafka's distributed architecture scales horizontally by spreading each topic across partitions; it guarantees ordering within a partition (not across an entire topic) and supports the at-least-once delivery semantics that business-critical applications depend on.
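Kafka's ordering guarantee applies within a partition, not across a whole topic, so related events must share a key that routes them to the same partition. The sketch below illustrates key-based routing. Kafka's default partitioner hashes keyed records with murmur2; this sketch substitutes Python's `zlib.crc32` purely as a deterministic stand-in, so its partition numbers will not match a real cluster's.

```python
import zlib
from collections import defaultdict
from typing import Dict, List, Tuple

NUM_PARTITIONS = 4


def partition_for(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    # Stand-in hash: real Kafka clients use murmur2 for keyed records.
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def route(events: List[Tuple[str, str]]) -> Dict[int, List[Tuple[str, str]]]:
    """Append (key, event) pairs to partitions; order is preserved per partition only."""
    partitions: Dict[int, List[Tuple[str, str]]] = defaultdict(list)
    for key, event in events:
        partitions[partition_for(key)].append((key, event))
    return partitions


events = [("acct-1", "opened"), ("acct-2", "opened"),
          ("acct-1", "deposited"), ("acct-1", "closed")]
parts = route(events)
acct1 = [e for k, e in parts[partition_for("acct-1")] if k == "acct-1"]
print(acct1)  # ['opened', 'deposited', 'closed']
```

Because the partition is derived from the key modulo the partition count, adding partitions to an existing topic changes where keys land, which can break per-key ordering during the transition.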

Amazon Kinesis offers managed event streaming for organizations preferring cloud-native solutions. Kinesis integrates seamlessly with AWS ecosystem services, enabling rapid development of serverless event-driven applications while reducing operational overhead.

Stream Processing Platforms

Apache Flink provides stateful stream processing with low latency (often in the milliseconds), supporting complex event processing scenarios including windowing, aggregations, and temporal joins. Flink's checkpoint-based exactly-once guarantees for application state, combined with transactional or idempotent sinks, provide the end-to-end consistency that financial and compliance-sensitive applications need.

Event processing logic must account for late-arriving events, out-of-order processing, and state management across distributed processing nodes. Proper implementation requires careful consideration of checkpointing strategies, state backend selection, and resource allocation planning.
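The sketch below shows event-time tumbling windows with a watermark, one common way to handle out-of-order and late events. The watermark trails the largest timestamp seen by a fixed delay; a window is emitted once the watermark passes its end, and events for already-closed windows are collected separately instead of being silently dropped. This mirrors the idea behind Flink's bounded-out-of-orderness watermarks and late-data side outputs, but it is plain Python, not Flink code, and it omits checkpointing and distributed state.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def tumbling_sums(
    events: List[Tuple[int, int]], size: int = 10, max_delay: int = 5
) -> Tuple[List[Tuple[int, int]], List[Tuple[int, int]]]:
    """Sum values per event-time window [start, start + size).

    Returns (emitted, late): emitted holds (window_start, sum) in emission order,
    late holds (timestamp, value) for events whose window had already closed."""
    open_windows: Dict[int, int] = defaultdict(int)
    emitted: List[Tuple[int, int]] = []
    late: List[Tuple[int, int]] = []
    max_ts = float("-inf")
    for ts, value in events:
        start = ts - ts % size                  # window [start, start + size)
        if start + size <= max_ts - max_delay:  # window already closed by the watermark
            late.append((ts, value))
            continue
        open_windows[start] += value
        max_ts = max(max_ts, ts)
        watermark = max_ts - max_delay          # no older events are expected after this
        for s in sorted(w for w in open_windows if w + size <= watermark):
            emitted.append((s, open_windows.pop(s)))
    for s in sorted(open_windows):              # end of a bounded stream: flush the rest
        emitted.append((s, open_windows.pop(s)))
    return emitted, late


stream = [(1, 5), (4, 3), (12, 2), (9, 4), (16, 1), (3, 7), (27, 6)]
emitted, late = tumbling_sums(stream)
print(emitted)  # [(0, 12), (10, 3), (20, 6)]
print(late)     # [(3, 7)]
```

The out-of-order event at time 9 is still counted because the watermark (7 at that point) has not passed its window's end, while the event at time 3 arrives after window [0, 10) has been emitted and is routed to the late list.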

Enterprise Integration

Modern API gateways like Tyk provide event publishing and routing capabilities that bridge traditional request-response systems with event-driven architectures. This hybrid approach enables gradual migration strategies that minimize business disruption while introducing event-driven capabilities.

Integration patterns must address schema evolution, message versioning, and backward compatibility requirements. Enterprise environments typically require coexistence of multiple event formats and processing protocols during transition periods.
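A compatibility gate in the deployment pipeline can catch breaking schema changes before they ship. The sketch below checks whether consumers using a new schema can still read events written with an old one, under a deliberately simplified rule set: added fields need defaults, and shared fields must keep their type. The `FieldSpec` representation is hypothetical, and real schema registries apply richer rules; Avro, for example, permits some numeric type promotions that this sketch rejects.

```python
from typing import Dict, List, NamedTuple


class FieldSpec(NamedTuple):
    type: str
    has_default: bool = False


Schema = Dict[str, FieldSpec]


def backward_compatibility_errors(old: Schema, new: Schema) -> List[str]:
    """Return reasons why readers using `new` could fail on events written with `old`.

    Simplified rules:
      - a field added in `new` must have a default (old events lack it);
      - a field present in both schemas must keep the same type.
    Fields dropped from `new` are allowed: readers simply ignore them."""
    errors: List[str] = []
    for name, spec in new.items():
        if name not in old:
            if not spec.has_default:
                errors.append(f"new field '{name}' needs a default")
        elif old[name].type != spec.type:
            errors.append(f"field '{name}' changed type {old[name].type} -> {spec.type}")
    return errors


v1 = {"order_id": FieldSpec("string"), "total_cents": FieldSpec("long")}
v2_ok = {**v1, "currency": FieldSpec("string", has_default=True)}
v2_bad = {"order_id": FieldSpec("string"), "total_cents": FieldSpec("string"),
          "channel": FieldSpec("string")}
print(backward_compatibility_errors(v1, v2_ok))  # []
print(backward_compatibility_errors(v1, v2_bad))
```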

Relationship-Driven Delivery: Partnership Approach to EDA Implementation

Successful event-driven architecture implementation requires collaborative partnership between business stakeholders and technical teams. Business domain expertise must inform event schema design, ensuring that technical events accurately represent business concepts and support future analytical requirements.

Our partnership approach emphasizes iterative development with continuous business validation. Rather than implementing complete EDA solutions in isolation, we work closely with client teams to identify high-value use cases, prototype solutions, and gradually expand event-driven capabilities across organizational boundaries.

Change management becomes particularly critical in EDA implementations. Asynchronous, event-driven approaches require different mental models than traditional synchronous processing patterns. Training and knowledge transfer ensure that development teams understand both the technical implementation details and the business implications of architectural decisions.

Long-term success depends on establishing governance frameworks for event schema evolution, performance monitoring, and operational procedures. These frameworks enable organizations to maintain system reliability while continuously evolving business capabilities.

Enterprise-Grade Execution: Measuring Success and Scaling Operations

Performance Metrics and Monitoring

Event-driven systems require comprehensive monitoring across multiple dimensions including throughput, latency, error rates, and business metrics. Traditional application monitoring approaches must expand to include event flow analysis, stream processing performance, and end-to-end business process visibility.
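Consumer lag is one of the most direct event-flow metrics: for each partition, the latest offset in the log minus the offset the consumer group has committed. The sketch below computes it from plain dictionaries; in practice the offsets would come from the broker (Kafka's consumer-group tooling reports the same figure), and the sample values and alert threshold are hypothetical. Treating an uncommitted partition as starting from offset 0 is an assumption; the real starting point depends on the consumer's offset-reset policy.

```python
from typing import Dict, List


def consumer_lag(end_offsets: Dict[int, int], committed: Dict[int, int]) -> Dict[int, int]:
    """Per-partition lag: log end offset minus committed offset.
    Assumption: a partition with no commit is treated as starting at offset 0."""
    return {p: end - committed.get(p, 0) for p, end in end_offsets.items()}


def partitions_over(lag: Dict[int, int], threshold: int) -> List[int]:
    """Partitions whose lag exceeds the alert threshold, in ascending order."""
    return sorted(p for p, n in lag.items() if n > threshold)


end_offsets = {0: 1_000, 1: 1_200, 2: 900}   # hypothetical sample values
committed = {0: 990, 1: 400}                  # partition 2 has no commit yet
lag = consumer_lag(end_offsets, committed)
print(lag)                        # {0: 10, 1: 800, 2: 900}
print(sum(lag.values()))          # 1710
print(partitions_over(lag, 500))  # [1, 2]
```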

Key performance indicators should align with business objectives rather than purely technical metrics. Customer experience improvements, operational efficiency gains, and revenue impact provide meaningful measures of EDA implementation success.

Operational Excellence

Production event-driven systems require robust operational procedures including event replay capabilities, schema migration strategies, and disaster recovery planning. These capabilities ensure business continuity while enabling system evolution and maintenance activities.

Scaling considerations must address both technical and organizational dimensions. As event volumes grow, infrastructure scaling must occur alongside team scaling and operational procedure refinement. Successful organizations establish centers of excellence that provide guidance and best practices across multiple EDA implementations.

Continuous Evolution

Event-driven architectures support continuous evolution of business capabilities through event replay and A/B testing. Organizations can run new business logic against historical event streams before releasing it, making experimentation with business rule changes and process optimizations low-risk, provided the replayed logic writes only to isolated outputs and triggers no external side effects such as customer notifications.
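A minimal sketch of replay-based experimentation: recorded events are run through both the current rule and a candidate rule, and only the events whose outcome would change are reported. The `Payment` event, both fraud-review rules, and the "XX" country code are hypothetical; the key property is that the comparison only reads history and writes nothing back.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Payment:
    payment_id: str
    amount_cents: int
    country: str


Rule = Callable[[Payment], bool]  # True means "flag for manual review"


def current_rule(p: Payment) -> bool:
    return p.amount_cents > 100_000


def candidate_rule(p: Payment) -> bool:
    # Hypothetical proposed change: a lower threshold for one region.
    return p.amount_cents > 100_000 or (p.country == "XX" and p.amount_cents > 20_000)


def shadow_compare(history: List[Payment], old: Rule, new: Rule) -> List[str]:
    """Replay recorded events through both rules and return the IDs whose
    decision would change. Read-only: no state is written back."""
    return [p.payment_id for p in history if old(p) != new(p)]


history = [Payment("p1", 150_000, "US"), Payment("p2", 30_000, "XX"),
           Payment("p3", 5_000, "XX")]
print(shadow_compare(history, current_rule, candidate_rule))  # ['p2']
```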

This capability supports agile business development approaches where new features and business capabilities can be developed, tested, and deployed with minimal risk to existing operations.

Strategic Partnership for Technology Transformation Success

Event-driven architecture implementation represents a fundamental shift in how organizations design, build, and operate business systems. Success requires more than technical expertise—it demands strategic partnership that aligns technology capabilities with business objectives while managing organizational change effectively.

LogixGuru's Technology Transformation approach combines deep technical expertise with proven methodologies for managing complex enterprise implementations. Our 20+ years of experience enables us to guide organizations through EDA adoption while avoiding common pitfalls that can derail digital transformation initiatives.

Ready to explore how event-driven architecture can accelerate your Technology Transformation? Our enterprise architecture team offers comprehensive assessments that identify high-value EDA opportunities within your current technology landscape. We provide strategic roadmaps, proof-of-concept development, and implementation guidance that ensures successful adoption of event-driven patterns.

Contact our advisory team to schedule a Technology Transformation consultation focused on your specific business requirements and organizational context. Together, we can design event-driven solutions that deliver measurable business value while building foundation capabilities for future innovation.